Data Quality Management Practical Tests and Scripted Solutions

There are several simple steps you can take to test your data's quality.

Ideally, this should be done immediately after defining a file in Arbutus Analyzer and before beginning your analysis. Running the tests below will document the results in the Command Log. It's a best practice to use a script to execute your tests and have the script direct the results to an output file. This can become part of your workpapers to demonstrate that the tests have been performed.

First Level Testing

Control Totals - Ask your data provider to inform you how many records were in the source data and what the control or hash totals were for numeric fields:

- Count command to document the record count

- Total command on key numeric fields for control/hash totals

Uniqueness of Records

It's possible that a single original record can be written more than once to a file due to processing errors.

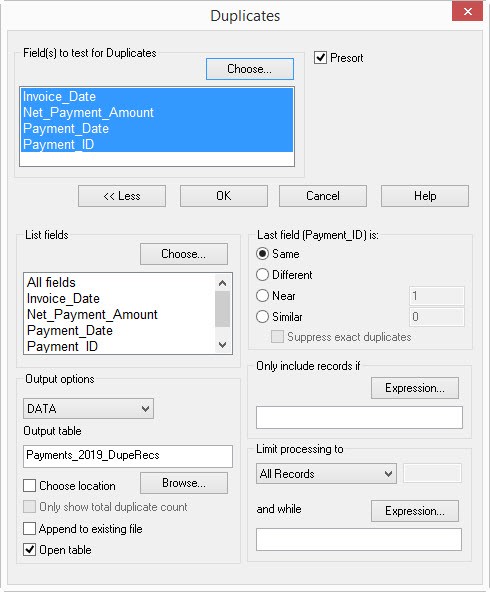

To test for record uniqueness, use the Duplicates command, selecting all the physical fields in the "Field(s) to test for Duplicates" section of the command dialog:

Duplicates ON <field list> To "Payments_2019_DupeRecs" Open Presort

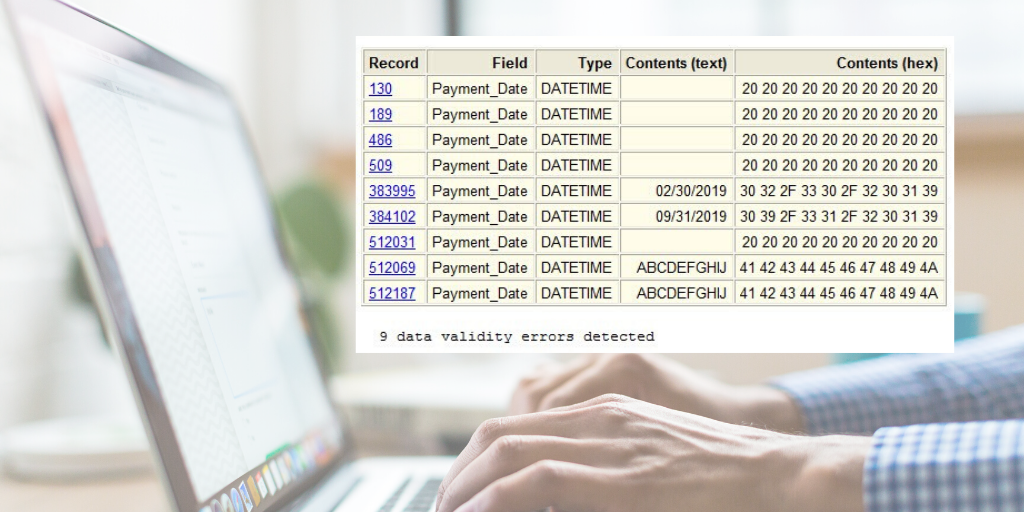

Data Corruption

Data can become corrupted in many ways as it is being processed. Performing an extraction from a database, zipping up a file, and even sending it as an e-mail attachment are activities that can cause corruption. Numeric and date fields are particularly vulnerable.

Run the Verify command against all the physical fields. The output will tell you if any evidence of corruption has been detected:

- Non-numeric characters in numeric fields

- Invalid/blank dates

- Invisible/non-printing characters (such as tabs)

Field Level Testing

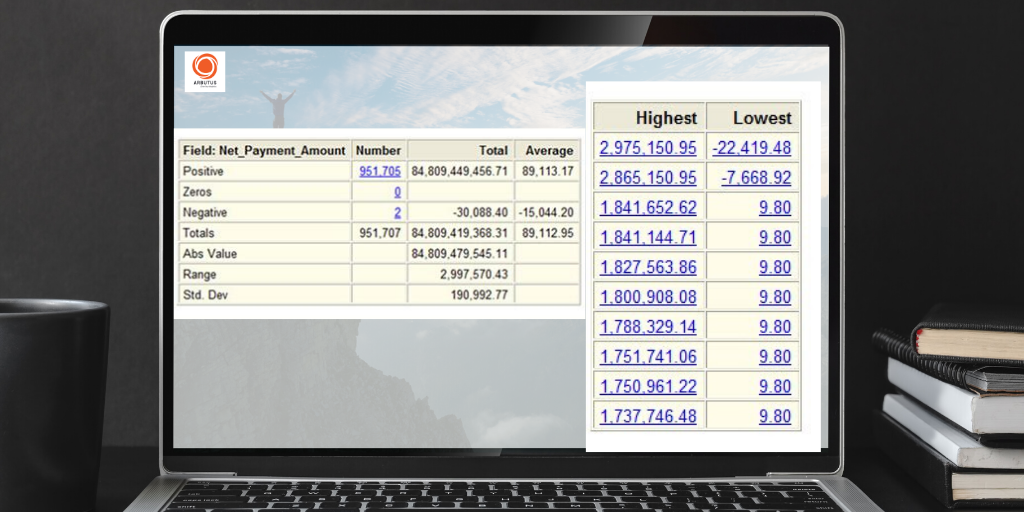

Numeric and date fields are the prime candidates for quality testing. They are indicators of the scope (time period) and materiality of the data. Key character fields should also be tested.Numeric Fields

The Statistics command provides a wealth of information for both numeric and date fields. For numeric fields, it can rapidly display the following results:

- Number/Total/Average

- Number/Total/Average of positives

- Number of zeros

- Number/Total/Average of negatives

- Absolute total

- Range

- Standard deviation

- Top X

It's always useful to review the Top X results to help you make your decision to test for outliers.

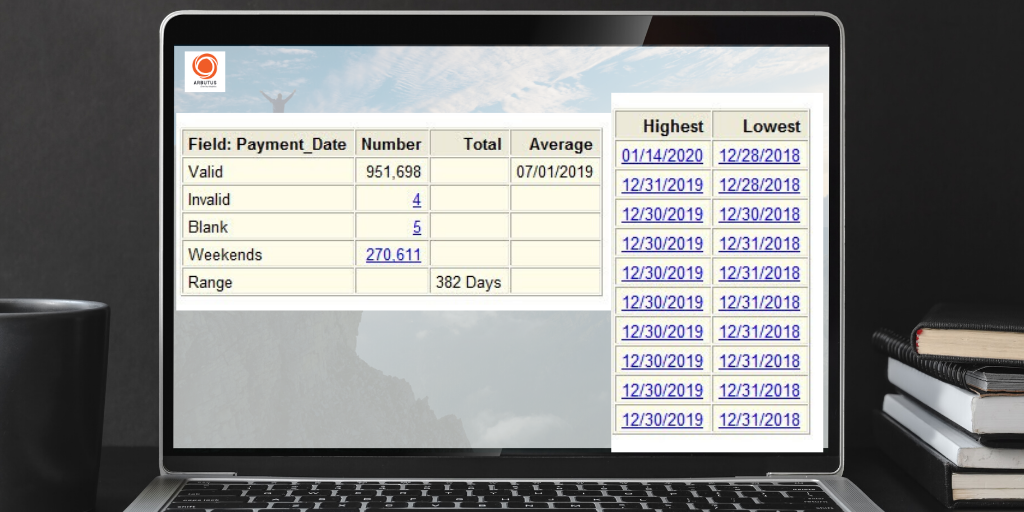

Date Fields

The Statistics command again provides significant results:

- Blank dates

- Invalid dates

- Weekend dates

- Range

- Top X dates

Invalid dates are those which contain content that does not conform to the field's date format. Details of the invalid/blank dates' contents can be displayed by the Verify command. Weekend dates may be of interest to tests of manual journal entries, where weekend or holiday transactions are considered high-risk. The Range result will immediately confirm the span of the dates; a range greater than 365 will tell you that the records cover more than a one-year period. Reviewing the Top X results can identify the existence of outliers. Test logically-related date fields with filters to determine if there are anomalies, such as an invoice date coming after a payment date in a given record. Use a filter with the Count command to document their existence: Count If Invoice_Date > Payment_Date

Testing and Scripted Solutions

-

Character Fields: Basic Tests

Due to their complex nature, character fields may require more than one command for testing. Some are straightforward, using the Count command with a filter that includes a function:

• Blanks >> Count If IsBlank(<field name>)

• Short lengths >> Count If Length(Alltrim(<field name>)) < 3 -

Character Fields: Format Testing

For systems with weak or nonexistent input validation, it's useful to test the format of a character field if the format of the data is expected to be consistent. Fields such as phone numbers, postal codes, and custom ID numbers are examples of such data.

The Format function is of great value as it reveals the underlying format of text fields. Used in conjunction with the Classify command, it will reveal how many formats exist in a given field. Format replaces numbers with "9", letters with "x" or "X" (depending on the case) and displays all other characters.

In this case, vendor data has been assembled from multiple sources. Although the data entry team was informed that only the digits should be entered, it's clear that the Phone_Number field data has been entered in multiple, inconsistent formats:

To determine the number and structure of the various formats, we can create a computed field and then run the Classify command to display how many formats are being used and to what extent.

First, we define a computed field that reveals the underlying format. Go to Edit >> Table Layout >> Add a New Expression. Name the new field Phone_Number_Format and click on the "f(x)" button to open the Expression Builder.

In the main dialog, enter Format (Phone_Number) in the Expression box to add the new field to the table layout. Back in the view, add the new field next to the Phone_Number field.

Next, run the Classify command against the new field. You can specify the "TOP" parameter to sort the results in descending order of frequency. The output will display all formats detected and their number.

Eleven different formats were found.

If you need to harmonize the phone numbers in order to search for duplicates or to match against another set of phone numbers, a computed field such as Phone_Number_Clean using the Include function would do the job:

Include (Phone_Number,"0~9")This would strip out all characters except numerics.

If the existence of phone numbers that were not ten digits needs to be followed up, the associated records could be extracted to another file using the following filter:

Length(Alltrim(Phone_Number_Clean)) <> 10 -

Scripted Solutions

Data quality testing should be conducted on every file prior to executing any analytics. To streamline the process, select the tests you need to conduct and embed them in an Arbutus procedure. Run the procedure every time you receive new data. This is far more efficient and consistent than executing the tests manually.

The script can prompt the user to select the target file from a dropdown list. It can also send all the test results to a table rather than leaving them in the log. This table can be exported to a spreadsheet or any other format that is appropriate for your workpapers. It's also possible to add the source table, field names, and the date/time of the tests to the output.

Sample Date Fields Output

Sample Numeric Fields Output

This has been split into two images in order to display the complete results.

-

Character Fields: Multi-File Testing for Valid Codes

Vendor numbers, product IDs, and employee IDs are examples of codes that should be tested for validity against a master file. Use the Join command to identify records (such as vendor payments) where the vendor number does not exist in the vendor master file.

1. Open the transaction file and start the Join command

2. Select the vendor master file from the "Secondary table" list

3. In the "Type of Join" dropdown, select "Unmatched primary records only"

4. In the "Primary key fields" list, select the vendor number field

5. In the "Secondary key fields" list, select the vendor number field

6. In the "Primary fields to output" list, select all the transaction fields you need in your results

7. Name the output file and click "OK." The output will contain all the payment transactions with no matching vendor number in the master file

Analytics By Business Process

Learn more about various analytics tests to meet business needs.

Analytics By Business Process